Welcome to Wild Plant 3D

We introduce Scan2Mesh, a novel data-driven generative approach that transforms an unstructured and potentially incomplete range scan into a structured 3D mesh representation. The main contribution of this work is a generative neural network architecture whose input is a range scan of a 3D object and whose output is an indexed face set conditioned on the input scan. To generate a 3D mesh as a set of vertices and face indices, the generative model builds on a series of proxy losses for vertices, edges, and faces. At each stage, we realize a one-to-one discrete mapping between the predicted and ground-truth data points with a combination of convolutional and graph neural network architectures. This enables our algorithm to predict a compact mesh representation similar to those that artists create by hand in 3D modeling software. Our generated meshes are thus sharper and cleaner, with a fundamentally different structure from those generated through implicit functions; this is a first step in bridging the gap towards artist-created CAD models.
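The one-to-one discrete mapping between predicted and ground-truth points can be illustrated with a minimal sketch. It assumes that both vertex sets have the same size and that the matching cost is Euclidean distance; the function names `match_vertices` and `matched_vertex_loss` are hypothetical. The exhaustive search over permutations is only a stand-in for an efficient assignment solver such as the Hungarian algorithm (available, for example, as `scipy.optimize.linear_sum_assignment`).

```python
import math
from itertools import permutations


def match_vertices(pred, gt):
    """Return (assignment, cost): assignment[i] is the ground-truth index
    matched to predicted vertex i, chosen to minimise total Euclidean distance.

    Hypothetical helper. Brute force over all permutations, so it is only
    usable for a handful of points; a real system would use the Hungarian
    algorithm instead.
    """
    if len(pred) != len(gt):
        raise ValueError("expects equally sized vertex sets")
    best, best_cost = None, math.inf
    for perm in permutations(range(len(gt))):
        cost = sum(math.dist(pred[i], gt[j]) for i, j in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return list(best), best_cost


def matched_vertex_loss(pred, gt):
    """Mean distance between matched pairs: an illustrative proxy loss on vertices."""
    _, cost = match_vertices(pred, gt)
    return cost / len(pred)


# Predictions arrive in arbitrary order; matching recovers the correspondence.
pred = [(0.9, 0.0, 0.0), (0.0, 0.0, 0.1), (0.0, 1.1, 0.0)]
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
assignment, cost = match_vertices(pred, gt)
print(assignment)  # [1, 0, 2]
```

Because the loss is computed only after matching, it does not penalize the network for emitting vertices in a different order than the ground truth.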

3D meshes are one of the most popular representations used to create and design 3D surfaces, in applications ranging from content creation for movies and video games to architectural and mechanical design modeling. These mesh or CAD models are handcrafted by artists, often inspired by or mimicking real-world objects and scenes, through expensive and tedious manual effort. Our aim is to develop a generative model for such 3D mesh representations, that is, a mesh model described as an indexed face set: a set of vertices given as 3D positions, and a set of faces that index into the vertices to describe the 3D surface of the model. In this way, we can begin to learn to generate 3D models in a manner similar to the handcrafted content creation process.
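To make the indexed face set concrete, here is a minimal Python sketch of a tetrahedron stored as a vertex list plus faces that index into it. The helper `edges_from_faces` is hypothetical; it shows that edge connectivity is implied by the face indices rather than stored separately, and that this closed surface satisfies Euler's formula V - E + F = 2.

```python
# Vertices: 3D positions.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]

# Faces: triples of indices into `vertices`, ordered counter-clockwise
# when viewed from outside the tetrahedron.
faces = [
    (0, 2, 1),
    (0, 1, 3),
    (0, 3, 2),
    (1, 2, 3),
]


def edges_from_faces(faces):
    """Derive the undirected edge set implied by the face indices."""
    edges = set()
    for face in faces:
        for k in range(len(face)):
            a, b = face[k], face[(k + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))
    return edges


edges = edges_from_faces(faces)
print(len(vertices), len(edges), len(faces))  # 4 6 4
print(len(vertices) - len(edges) + len(faces))  # 2
```

Four positions and four index triples fully describe the surface, which is the kind of compact representation the text contrasts with grid-based output.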

The nature of these 3D meshes, structured but irregular, makes them very difficult to generate. In particular, with the burgeoning direction of generative deep learning approaches for 3D model creation and completion, the irregularity of mesh structures poses a significant challenge, as these approaches are largely designed for regular grids. Thus, work on generating 3D models predominantly relies on implicit functions stored in regular volumetric grids, for instance the popular truncated signed distance field (TSDF) representation. A mesh can then be extracted at the isosurface of the implicit function through Marching Cubes; however, this uniformly sampled, unwieldy triangle-soup output remains fundamentally different from the meshes found in video games or other artist-created mesh/CAD content.
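Why grid-based extraction yields uniformly sampled output can be seen in a small sketch. It samples a TSDF of a sphere on a regular grid and counts the cells whose corners change sign, which are the cells where Marching Cubes would emit triangles. The sphere, its radius, and the truncation value are illustrative assumptions, and the sketch counts candidate cells rather than running Marching Cubes itself (a real implementation is, for example, `skimage.measure.marching_cubes` in scikit-image).

```python
import math


def sphere_tsdf(n, radius=0.35, trunc=0.1):
    """Sample a truncated signed distance field of a sphere centred in the
    unit cube on an n x n x n grid (negative inside, positive outside).
    The sphere and parameter values are illustrative assumptions."""
    h = 1.0 / (n - 1)
    grid = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d = math.dist((i * h, j * h, k * h), (0.5, 0.5, 0.5)) - radius
                grid[i][j][k] = max(-trunc, min(trunc, d))  # truncate the distance
    return grid


def count_surface_cells(grid):
    """Count cells with both a negative and a non-negative corner value:
    these are the cells Marching Cubes would triangulate at isovalue 0."""
    n = len(grid)
    count = 0
    for i in range(n - 1):
        for j in range(n - 1):
            for k in range(n - 1):
                corners = [grid[i + a][j + b][k + c]
                           for a in (0, 1) for b in (0, 1) for c in (0, 1)]
                if min(corners) < 0.0 <= max(corners):
                    count += 1
    return count


for n in (16, 32):
    print(n, count_surface_cells(sphere_tsdf(n)))
```

Doubling the grid resolution roughly quadruples the number of surface cells, so the triangle count is driven by the sampling density rather than by the shape's structure.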

Rather than generating 3D mesh models extracted from regular volumetric grids, we instead take inspiration from 3D models that have been hand-modeled, that is, compact CAD-like mesh representations. We therefore propose a novel approach, Scan2Mesh, which formulates mesh generation as producing a lightweight indexed face set, and we demonstrate it by generating complete 3D mesh models conditioned on noisy, partial range scans. To the best of our knowledge, our approach is the first to leverage deep learning to fully generate an explicit 3D mesh structure. From an input partial scan, we employ a graph neural network to jointly predict mesh vertex positions and edge connectivity; this joint optimization enables reliable vertex generation for the final mesh structure. Interpreting these vertex and edge predictions as a graph, we construct the corresponding dual graph, with potentially valid mesh faces as dual graph vertices, from which we then predict mesh faces. These tightly coupled predictions of mesh vertices, edges, and faces enable effective transformation of incomplete, noisy object scans into complete, compact 3D mesh models. Our generated meshes are cleaner and sharper, while having a fundamentally different structure from those generated through implicit functions; we believe this is a first step towards bridging the gap to artist-created CAD models.
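The dual-graph step can be sketched as follows, under the assumption (ours, for illustration) that candidate faces are the triangles formed by predicted edges and that two candidate faces are adjacent in the dual graph when they share a mesh edge. The function names `candidate_faces` and `dual_graph` are hypothetical, and the final face prediction over the dual graph, which the text attributes to a learned model, is not shown.

```python
from itertools import combinations


def candidate_faces(num_vertices, edges):
    """Enumerate triangles (3-cycles) in the predicted edge graph.
    Each triangle is a candidate face, i.e. a vertex of the dual graph."""
    adj = {v: set() for v in range(num_vertices)}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    faces = []
    for a, b, c in combinations(range(num_vertices), 3):
        if b in adj[a] and c in adj[a] and c in adj[b]:
            faces.append((a, b, c))
    return faces


def dual_graph(faces):
    """Connect two candidate faces whenever they share a mesh edge,
    i.e. exactly two vertex indices."""
    dual_edges = []
    for i, j in combinations(range(len(faces)), 2):
        if len(set(faces[i]) & set(faces[j])) == 2:
            dual_edges.append((i, j))
    return dual_edges


# A square 0-1-2-3 split by the diagonal 0-2.
square_edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
faces = candidate_faces(4, square_edges)
print(faces)              # [(0, 1, 2), (0, 2, 3)]
print(dual_graph(faces))  # [(0, 1)]
```

Here the two triangles become two dual vertices joined by a single dual edge; a face predictor operating on this dual graph would then decide which candidates to keep as mesh faces.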

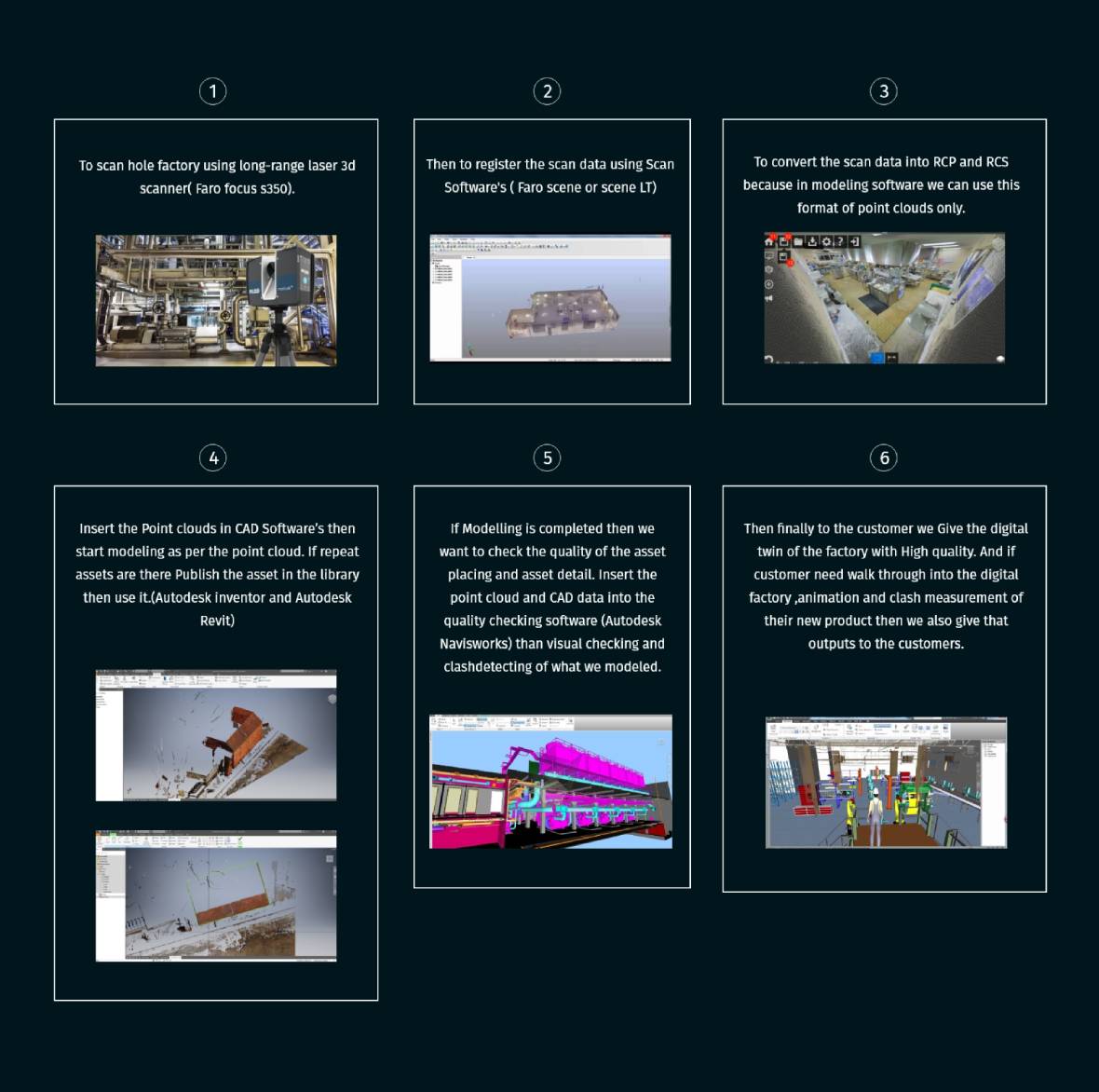

P3DM offers end-to-end solutions to cater